Multioutput CNN in PyTorch

PyTorch is an open source deep learning research platform/package which utilises tensor operations like NumPy and uses the power of GPU. It was developed by Facebook’s AI research group and is now widely used for machine learning. In this article, I want to concentrate on Multioutput CNN (Convolutional Neural Network) analysis in PyTorch. There are many guidelines on how to use PyTorch for deep learning analysis. However, as far as I know, Multioutput CNN in PyTorch is one of the less mentioned topics and I want to fill this gap with this article.

This article has been published for non-commercial knowledge-sharing purposes and the content of this article belongs to the author only; they do not necessarily reflect the views of JDSC (Japan Data Science Consortium). The aligned and cropped images have been obtained from UTKFace. The copyright belongs to the original owners. If any of the images belongs to you, please let the author know and the images will be removed from the article immediately.

Note: this guide is primarily for data-scientists/data-engineers who have sufficient knowledge in PyTorch. If you do not have enough knowledge, you can learn PyTorch from PyTorch.org tutorials. Furthermore, I can recommend an excellent course in Coursera called “Deep Neural Networks in PyTorch”.

Task description and Dataset overview

As for the dataset for the preceding analysis in PyTorch, imagine you have 2000 images of people (you can download these images from UTKFace).

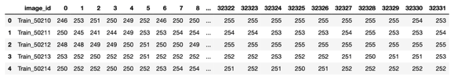

In order to save space, these images have been digitalized and transferred to parquet file in the following form:

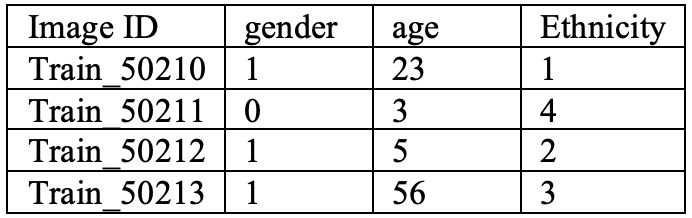

Your task is to classify these images by finding gender, ethnicity and age of the people. In other words, your model should take one input (image) and produce multiple outputs (age, gender and ethnicity). Furthermore, for your model training, you have been given ‘train.csv’ which provides you with age, ethnicity and gender data for each image you have:

where 1=male and 0=female in gender column. As for ethnicity, there are four groups: 1=European, 2=African, 3=Asian and 4=Other. You should merge these two datasets, train_image.parquet and train.csv, and conduct CNN analysis.

CNN analysis

Importing libraries

# General libraries

import pandas as pd #For working with dataframes

import numpy as np #For working with image arrays

import cv2 #For transforming image

import matplotlib.pyplot as plt #For representation#For model building

import torch

from torch import nn, optim

import torchvision

from torchvision import transforms, datasets, models, utils

from torch.utils.data import Dataset, DataLoader

from PIL import Image

from sklearn.model_selection import train_test_split

from torch.nn import functional as F

from skimage import io, transform

from torch.optim import lr_scheduler

from skimage.transform import AffineTransform, warp

torch library is the main library for the PyTorch analysis. With the help of “nn”, we can build custom models and “optim” enables us to use Gradient Descent functions like Adam and SGD. With regards to the remaining libraries such as torchvision, PIL and skimage, we will use them for data transformations and building data loading pipelines.

Loading data using Dataset and DataLoader

torch.utils.data.Dataset and torch.utils.data.DataLoader enables you to import, transform your data and send this data to your model. In other words, you can build an excellent data pipeline with these two classes.

First, we start with creating Dataset class:

class MyData(Dataset):

def __init__(self, train=True, transform=None):

#Loading train.csv

train_df=pd.read_csv('train.csv')

#Loading image data and merging with train.csv

df=pd.merge(pd.read_parquet('image_train_data.parquet'),\

train_df, on='image_id').drop(['image_id'], axis=1)

#Leaving only image related columns

feature=df.drop(['age', 'gender', 'race'], axis=1)

#Setting labels

label_age=df['age']

label_gender=df['gender']

label_race=df['race']

#Splitting the data into train and validation set

X_train, X_test, y_age_train, y_age_test, y_gender_train, y_gender_test, y_race_train,\

y_race_test = train_test_split(feature, label_age, label_gender, label_race, test_size=0.2)

if train==True:

self.x=X_train

self.age_y=y_age_train

self.gender_y=y_gender_train

self.race_y=y_race_train

else:

self.x=X_test

self.age_y=y_age_test

self.gender_y=y_gender_test

self.race_y=y_race_test

#Applying transformation

self.transform=transform

def __len__(self):

return len(self.x)

def __getitem__(self, idx):

image=np.array(self.x.iloc[idx, 0:]).astype(float).reshape(137, 236)

label1=np.array([self.age_y.iloc[idx]]).astype('float')

label2=np.array([self.gender_y.iloc[idx]]).astype('float')

label3=np.array([self.race_y.iloc[idx]]).astype('float')

sample={'image': np.uint8(image), 'label_age': label1,\

'label_gender': label2,\

'label_race': label3}

#Applying transformation

if self.transform:

sample=self.transform(sample)

return sampleIn __init__ function of the class, firstly, you download and merge the datasets. Afterwards, you define labels and features of the dataset. For evaluating your model, you can also split into train and test (also called validation) data. Then, you switch to __len__ which returns the size of the dataset. Finally, you should develop __getitem__ function so that the function returns indexed data from dataset. These three functions are important part for Dataset class and you should never forget these functions!!!

Before switching to DataLoader, you should decide whether to use data transformation or not. Transforming data helps you decrease bias, increase accuracy as well as the data size and improve model performance. If you want to use data transformation, you can easily use the following classes:

- Crop class:

class crop(object):

def __init__(self, resize_size):

self.resize_size = resize_size

def __call__(self, sample):

image, label1, label2, label3 = sample['image'],\

sample['label_age'], sample['label_gender'], sample['label_race']

_, thresh=cv2.threshold(image, 30, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)

contours, _ = cv2.findContours(thresh,cv2.RETR_LIST,cv2.CHAIN_APPROX_SIMPLE)[-2:]

idx=0

ls_xmin=[]

ls_ymin=[]

ls_xmax=[]

ls_ymax=[]

for cnt in contours:

idx+=1

x,y,w,h = cv2.boundingRect(cnt)

ls_xmin.append(x)

ls_ymin.append(y)

ls_xmax.append(x + w)

ls_ymax.append(y + h)

xmin = min(ls_xmin)

ymin = min(ls_ymin)

xmax = max(ls_xmax)

ymax = max(ls_ymax)

roi = image[ymin:ymax,xmin:xmax]

resized_image = cv2.resize(roi, (self.resize_size, self.resize_size),\

interpolation=cv2.INTER_AREA)

sample={'image': resized_image, 'label_age': label1, 'label_gender': label2,\

'label_race': label3}

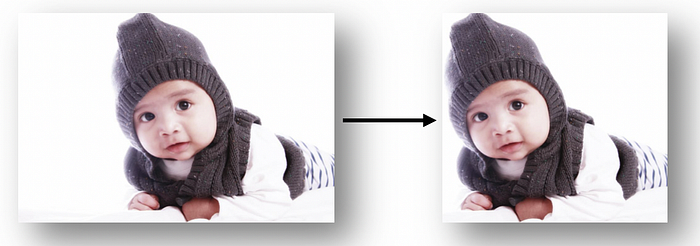

return sampleWith the help of above given “crop” class, you can transform you image by cropping it in the following way:

Cropping images helps your model concentrate more on important parts of the image and brings higher accuracy.

2. Rotate image class:

Second class rotates image and brings different types of data to your model. As a result, your model can see different types of images and this can also lead to higher accuracy.

class rotate_image(object):

def __call__(self, sample):

image, label1, label2, label3 = sample['image'],\

sample['label_age'], sample['label_gender'], sample['label_race']

min_scale = 0.8

max_scale = 1.2

sx = np.random.uniform(min_scale, max_scale)

sy = np.random.uniform(min_scale, max_scale)

# --- rotation ---

max_rot_angle = 7

rot_angle = np.random.uniform(-max_rot_angle, max_rot_angle) * np.pi / 180.

# --- shear ---

max_shear_angle = 10

shear_angle = np.random.uniform(-max_shear_angle, max_shear_angle) * np.pi / 180.

# --- translation ---

max_translation = 4

tx = np.random.randint(-max_translation, max_translation)

ty = np.random.randint(-max_translation, max_translation)

tform = AffineTransform(scale=(sx, sy), rotation=rot_angle, shear=shear_angle,

translation=(tx, ty))

transformed_image = warp(image, tform)

assert transformed_image.ndim == 2

sample={'image': resized_image, 'label_age': label1, 'label_gender': label2,\

'label_race': label3}

return sample3. Change to tensor class

Tensors are building blocks of neural networks in PyTorch. For the neural network, the input x should be a tensor, the output y is a tensor, the network will be comprised of a set of parameters which are also tensors. Therefore, we should change all features and labels to tensor. We can achieve this by using following class:

class RGB_ToTensor(object):

def __call__(self, sample):

image, label1, label2, label3 = sample['image'],\

sample['label_age'], sample['label_gender'], sample['label_race']

image=torch.from_numpy(image).unsqueeze_(0).repeat(3, 1, 1)

label1=torch.from_numpy(label1)

label2=torch.from_numpy(label2)

label3=torch.from_numpy(label3)

return {'image': image,

'label_age': label1,

'label_gender': label2,

'label_race': label3}Note: although you do need to use other transformations, you have to use ‘RGB_ToTensor’ class since models in PyTorch can only be developed with tensors.

4. Normalization class

class Normalization(object):

def __init__(self, mean, std):

self.mean = mean.view(-1, 1, 1)

self.std = std.view(-1, 1, 1)

def __call__(self, sample):

image, label1, label2, label3 = sample['image'],\

sample['label_age'], sample['label_gender'], sample['label_race']

return {'image': image,

'label_age': label1,

'label_gender': label2,

'label_race': label3}In PyTorch, transfer learning is widely used and this can significantly improve training time and accuracy. PyTorch has a page dedicated page to transfer learning. We will learn how to develop model with transfer learning in later sections. For now, you should know that in order to use transfer learning and also to increase the speed of your model training, you should use above given Normalisation class.

After creating your transform functions, you can now easily use DataLoader to deliver your loaded, transformed data to your model:

cnn_normalization_mean = torch.tensor([0.485, 0.456, 0.406])

cnn_normalization_std = torch.tensor([0.229, 0.224, 0.225])transformed_train_data = MyData(train=True, transform=transforms.Compose([crop(256),\

rotate_image(), RGB_ToTensor(),

Normalization(cnn_normalization_mean,\

cnn_normalization_std)]))transformed_test_data = MyData(train=False, transform=transforms.Compose([crop(256),\

RGB_ToTensor(),

Normalization(cnn_normalization_mean,\

cnn_normalization_std)]))train_dataloader = DataLoader(transformed_train_data, batch_size=50, shuffle=True, num_workers=4)

test_dataloader = DataLoader(transformed_test_data, batch_size=50, shuffle=True, num_workers=4)

Note that with the help of transforms.Compose, we can easily compose our created functions. Furthermore, normalization numbers for mean and std have been chosen based on the guides from PyTorch organization.

Creating a Multioutput CNN model

While building a model in PyTorch, you have two ways. First way is building your own custom model by using nn.Module or nn.Sequential. Second way is using nn.Module with pretrained models by just changing last layers of the pretrained model. The second option is mostly preferred in PyTorch community and therefore, I want to show the second option.

Before creating a model, we should import pretrainedmodels library:

import pretrainedmodelsNow, we create a multioutput CNN model by using resnet pretrained model:

import pretrainedmodels

class CNN1(nn.Module):

def __init__(self, pretrained):

super(CNN1, self).__init__()

if pretrained is True:

self.model = pretrainedmodels.__dict__["resnet34"](pretrained="imagenet")

else:

self.model = pretrainedmodels.__dict__["resnet34"](pretrained=None)

self.fc1 = nn.Linear(512, 100) #For age class

self.fc2 = nn.Linear(512, 2) #For gender class

self.fc3 = nn.Linear(512, 4) #For race class

def forward(self, x):

bs, _, _, _ = x.shape

x = self.model.features(x)

x = F.adaptive_avg_pool2d(x, 1).reshape(bs, -1)

label1 = self.fc1(x)

label2= torch.sigmoid(self.fc2(x))

label3= self.fc3(x)

return {'label1': label1, 'label2': label2, 'label3': label3}Using CUDA

torch.cuda is used to set up and run CUDA operations. In other words, you can easily transfer your model and data to CUDA and increase the speed of training your model. This is one of the advantages of using PyTorch!! In order to use CUDA, you can easily type following code:

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")By using above code, you can define your device as the first visible CUDA device if you have CUDA available and load your model, data to this device. If you do not have access to GPU, the device will be automatically switched to CPU.

Setting model and hyper-parameters

After building model, we will set the model and move it to device we defined earlier. In this case, if GPU is available, the model will be moved to GPU, if not, the model stays at CPU.

We also set hyper-parameters. Note that we have defined two types of criterion: one for binary output (in the case of gender) and one for multilabel outputs.

#Setting model and moving to device

model_CNN = CNN1(True).to(device)#For binary output:gender

criterion_binary= nn.BCELoss()#For multilabel output: race and age

criterion_multioutput = nn.CrossEntropyLoss()

optimizer = optim.SGD(model.parameters(), lr=0.001, momentum=0.9)

Creating training model function

The last function is to training function. In building training function, you should be careful in setting criterion for each output.

def train_model(model, criterion1, criterion2, optimizer, n_epochs=25):

"""returns trained model"""

# initialize tracker for minimum validation loss

valid_loss_min = np.Inf

for epoch in range(1, n_epochs):

train_loss = 0.0

valid_loss = 0.0

# train the model #

model.train()

for batch_idx, sample_batched in enumerate(train_dataloader):

# importing data and moving to GPU

image, label1, label2, label3 = sample_batched['image'].to(device),\

sample_batched['label_age'].to(device),\

sample_batched['label_gender'].to(device),\

sample_batched['label_race'].to(device)

# zero the parameter gradients

optimizer.zero_grad()

output=model(image)

label1_hat=output['label1']

label2_hat=output['label2']

label3_hat=output['label3']

# calculate loss

loss1=criterion1(label1_hat, label1.squeeze().type(torch.LongTensor))

loss2=criterion2(label2_hat, label2.squeeze().type(torch.LongTensor))

loss3=criterion1(label3_hat, label3.squeeze().type(torch.LongTensor))

loss=loss1+loss2+loss3

# back prop

loss.backward()

# grad

optimizer.step()

train_loss = train_loss + ((1 / (batch_idx + 1)) * (loss.data - train_loss))

if batch_idx % 50 == 0:

print('Epoch %d, Batch %d loss: %.6f' %

(epoch, batch_idx + 1, train_loss))

# validate the model #

model.eval()

for batch_idx, sample_batched in enumerate(test_dataloader):

image, label1, label2, label3 = sample_batched['image'].to(device),\

sample_batched['label_age'].to(device),\

sample_batched['label_gender'].to(device),\

sample_batched['label_race'].to(device)

output = model(image)

output=model(image)

label1_hat=output['label1']

label2_hat=output['label2']

label3_hat=output['label3']

# calculate loss

loss1=criterion1(label1_hat, label1.squeeze().type(torch.LongTensor))

loss2=criterion2(label2_hat, label2.squeeze().type(torch.LongTensor))

loss3=criterion1(label3_hat, label3.squeeze().type(torch.LongTensor))

loss=loss1+loss2+loss3

valid_loss = valid_loss + ((1 / (batch_idx + 1)) * (loss.data - valid_loss))

# print training/validation statistics

print('Epoch: {} \tTraining Loss: {:.6f} \tValidation Loss: {:.6f}'.format(

epoch, train_loss, valid_loss))

## TODO: save the model if validation loss has decreased

if valid_loss < valid_loss_min:

torch.save(model, 'model.pt')

print('Validation loss decreased ({:.6f} --> {:.6f}). Saving model ...'.format(

valid_loss_min,

valid_loss))

valid_loss_min = valid_loss

# return trained model

return modelTraining

Finally, we train the model by using following code:

model_conv=train_model(model_CNN, criterion_multioutput, criterion_binary, optimizer)