Automating Terraform in GitHub Actions with a self-hosted runner in AWS

Background

Recently, we built a prototype environment in AWS for our application. The application is still under development, and we keep adding features to it. That is where we hit a problem: each new feature required adding or changing AWS services, for example adding Fargate services and container definitions, or creating a new CloudFront distribution with S3 buckets for the front end of the feature. We had to add, remove or change these services manually, which cost a lot of time. For this reason, we automated our infrastructure builds with an Infra-CI-CD pipeline based on Terraform and GitHub Actions, which allows us to respond quickly to any request for an infrastructure change in AWS. In this article, I am going to build and present this CI-CD pipeline so that you can easily build CI-CD for your own infrastructure in AWS.

Introduction

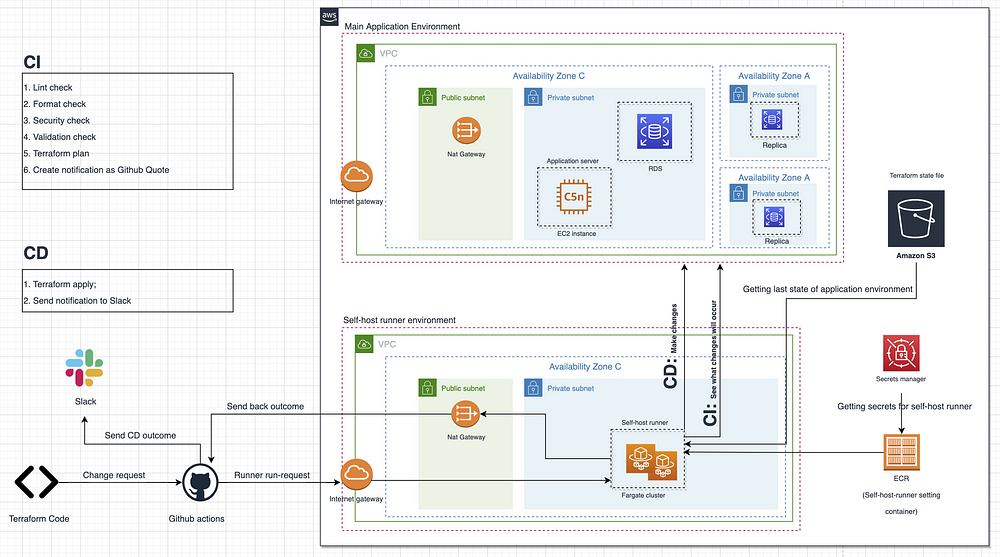

Figure 1 shows a diagram of the Infra-CI-CD pipeline we are going to build in this article. The diagram consists of the following parts:

Main application environment: this is the environment where our application runs. For simplicity, I included only a few services, such as RDS and an EC2 instance in private subnets. This infrastructure is built with Terraform and is the target of our CI-CD pipeline: the pipeline exists to change the services in this environment.

Self-hosted runner environment: this is the environment where our self-hosted runners for GitHub Actions run and make changes to the application environment. Note that instead of self-hosted runners, you can also use the default GitHub-hosted runners. In that case, however, a security problem arises: you have to give the runner a very powerful “AWS Admin role” so that it can change services, and to access AWS at all it needs an AWS access key ID and secret access key. I considered handing these secrets and this role to GitHub Actions and its runners too dangerous, so I decided to use self-hosted runners on AWS ECS. They run with the Admin role in a private subnet under my control and read any secrets (e.g. GitHub tokens) from Secrets Manager. If you want, you can reduce the runner's permissions. For example, if it only needs to change ECS, you can give the runner ECS admin permissions only.

This environment for the self-hosted runners is also built with Terraform, as I will show later.

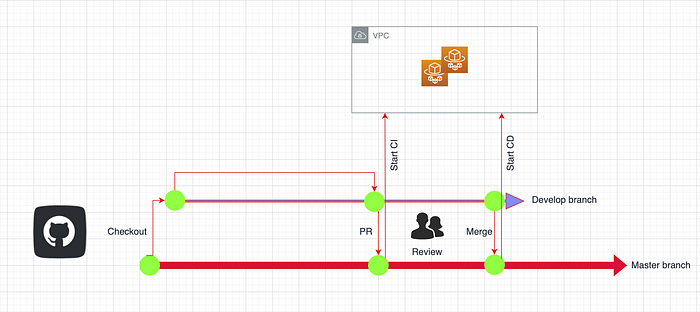

GitHub Actions: when code is pushed to GitHub and a pull request is created, GitHub Actions runs on the self-hosted runner and CI starts. CI consists of the following steps:

- Lint check

- Terraform code format check

- Security check: checks whether the changed Terraform files for the application introduce any security issue

- Terraform code validation check

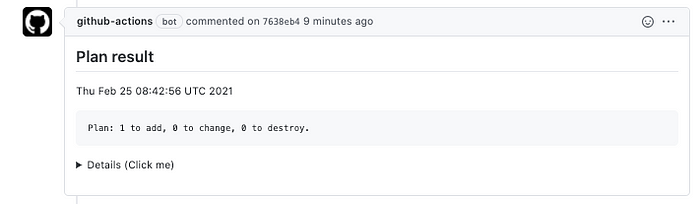

- Terraform plan: to see what changes will occur

- Post a notification as a GitHub comment about the planned changes to AWS services in the application environment
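The CI steps above can be sketched as a plain shell sequence. This is only an illustration, not the workflow from the repository: the article does not name its lint or security tools, so `tflint` and `tfsec` are assumptions, the function names `ci_steps` and `run_steps` are hypothetical, and the sketch assumes `terraform init` has already run and that `tfnotify` reads the “.tfnotify/github.yml” file mentioned later in this article.

```shell
# Print the CI commands in the order the article lists them, one per line.
# tflint and tfsec are assumed tools; the article does not say which ones it uses.
ci_steps() {
  cat <<'EOF'
tflint
terraform fmt -check -recursive
tfsec .
terraform validate
terraform plan -no-color | tfnotify --config .tfnotify/github.yml plan
EOF
}

# Run each command read from stdin and stop at the first one that fails.
run_steps() {
  while IFS= read -r step; do
    echo "+ $step"
    # </dev/null keeps a step from consuming the remaining lines of the list.
    sh -c "$step" </dev/null || return 1
  done
}
```

Running `ci_steps | run_steps` from the Terraform directory would execute the checks in order and stop as soon as one of them fails, which mirrors how a failed CI job blocks the pull request.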

If you merge the code into the main branch, CD starts on the self-hosted runners. CD includes the following steps:

- Terraform apply: apply the code and make changes to the infrastructure environment

- Send a notification to a Slack channel after terraform apply has finished, so that your team can see which services have been added, removed or changed

S3 bucket: an S3 bucket is used to store the Terraform state file. The self-hosted runner reads this bucket to learn the current state of the application environment before it runs CI-CD.

Starting to Build Infra-CI-CD

The source code is on GitHub.

Setting environment variables

First, to let Terraform access the AWS account and create the self-hosted runner, set the following environment variables:

export PAT={YOUR_GITHUB_PERSONAL_TOKEN_HERE}

export ORG={YOUR_GITHUB_ORG_HERE}

export REPO={YOUR_GITHUB_REPO_NAME_HERE}

export AWS_DEFAULT_REGION={YOUR_AWS_DEFAULT_REGION_HERE}

export AWS_SECRET_ACCESS_KEY={YOUR_AWS_SECRET_ACCESS_KEY_HERE}

export AWS_ACCESS_KEY_ID={YOUR_AWS_ACCESS_KEY_ID_HERE}

export AWS_ACCOUNT_ID={YOUR_AWS_ACCOUNT_ID_HERE}

Terraform's AWS provider automatically reads the AWS credentials and region from these variables.
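Before running Terraform, it can help to confirm that none of these variables is missing. The following is a minimal sketch and not part of the repository; the helper name `missing_vars` is hypothetical.

```shell
# Hypothetical helper: print the name of every listed variable that is unset or empty.
missing_vars() {
  for name in "$@"; do
    # Look up the variable whose name is stored in $name (names are trusted constants here).
    eval "value=\${$name:-}"
    [ -n "$value" ] || echo "$name"
  done
}

# The variables exported in this section.
REQUIRED_VARS="PAT ORG REPO AWS_DEFAULT_REGION AWS_SECRET_ACCESS_KEY AWS_ACCESS_KEY_ID AWS_ACCOUNT_ID"

# Word splitting of $REQUIRED_VARS is intentional: each name becomes one argument.
missing="$(missing_vars $REQUIRED_VARS)"
if [ -n "$missing" ]; then
  echo "Missing environment variables:" $missing >&2
fi
```

In a real script you would probably exit with a non-zero status when the list is not empty, so that Terraform never starts with incomplete settings.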

Setting backends for Terraform state files

At this stage, we need two S3 buckets as backends: one for the Terraform state file of the application environment and another for the Terraform state file of the self-hosted runner environment. The backends are already configured in the backend files in the GitHub repository.

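You should create the buckets manually with the names “self-host-runner-bucket” and “app-infra-state-bucket”, as defined in those files. Because S3 bucket names are globally unique, these exact names may already be taken; in that case, choose your own names and update the backend files to match. If your default region is different from “ap-northeast-1”, do not forget to change the region in the files to your region.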
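If you prefer the AWS CLI to the console for creating the buckets, the commands could look like the sketch below. It only prints the commands so you can review them first; the helper name `create_bucket_cmd` is hypothetical, and the bucket names are the ones from the backend files, so change them if you use your own.

```shell
# Hypothetical helper: print the AWS CLI command that would create one state bucket.
# us-east-1 is the one region where create-bucket must be called without a LocationConstraint.
create_bucket_cmd() {
  name="$1"
  region="$2"
  if [ "$region" = "us-east-1" ]; then
    echo "aws s3api create-bucket --bucket $name --region $region"
  else
    echo "aws s3api create-bucket --bucket $name --region $region --create-bucket-configuration LocationConstraint=$region"
  fi
}

# Fall back to the article's default region when AWS_DEFAULT_REGION is not set.
REGION="${AWS_DEFAULT_REGION:-ap-northeast-1}"
for bucket in self-host-runner-bucket app-infra-state-bucket; do
  create_bucket_cmd "$bucket" "$REGION"
done
```

Once the printed commands look right, you can run them by hand or pipe the output to `sh`.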

Creating application environment

Let us create the application environment. Pull the source code from GitHub and run the following commands:

# Initiate terraform

terraform init

# Check which resources will be created

terraform plan

# Create environment

terraform apply

Creating self-host runner

After creating the application environment, we move on to creating the self-hosted runner and its environment in the same AWS account. The code for the runner and its environment is already written in the “self-host-runner-ECS” folder of the same GitHub repository. Enter the folder and run the following commands:

# Initiate terraform

terraform init

# Create environment

./tf_apply.sh

The commands above create the environment for the self-hosted runner and an ECR repository to which we can push the runner’s container image. Now we build the image and push it to ECR as follows:

# Login to registry

aws ecr get-login-password | docker login --username AWS --password-stdin $AWS_ACCOUNT_ID.dkr.ecr.ap-northeast-1.amazonaws.com

# Create a container

docker build -f Dockerfile -q -t ecs-runner .

docker tag ecs-runner $AWS_ACCOUNT_ID.dkr.ecr.ap-northeast-1.amazonaws.com/ecs-runner

# Push the container to ECR

docker push $AWS_ACCOUNT_ID.dkr.ecr.ap-northeast-1.amazonaws.com/ecs-runner

# Start the runner

aws ecs update-service --cluster ecs-runner-cluster --service ecs-runner-ecs-service --force-new-deployment
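The commands above hardcode “ap-northeast-1” in the registry address. If you work in another region, one option is to build the address from your environment variables instead. This is a small sketch; the helper name `ecr_registry` is hypothetical and not part of the repository.

```shell
# Hypothetical helper: build the ECR registry host from an account ID and a region.
ecr_registry() {
  account_id="$1"
  region="$2"
  echo "${account_id}.dkr.ecr.${region}.amazonaws.com"
}

# Example use with the commands from this section:
#   REGISTRY="$(ecr_registry "$AWS_ACCOUNT_ID" "$AWS_DEFAULT_REGION")"
#   aws ecr get-login-password --region "$AWS_DEFAULT_REGION" \
#     | docker login --username AWS --password-stdin "$REGISTRY"
#   docker tag ecs-runner "$REGISTRY/ecs-runner"
#   docker push "$REGISTRY/ecs-runner"
```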

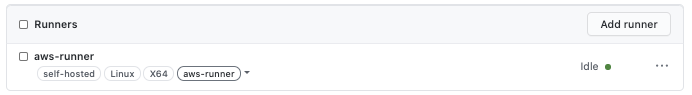

After running the commands above, the self-hosted runners for GitHub Actions start in AWS as Fargate tasks (autoscaling: max=2 and min=1), and you should see them running in GitHub.
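You can also confirm from the AWS side that the runner service has settled. The sketch below assumes the cluster and service names from the update-service command above; the function name `wait_for_runner` is hypothetical.

```shell
# Wait until the runner service reaches a steady state, then print its running task count.
# The default names are the ones used in the article's update-service command.
wait_for_runner() {
  cluster="${1:-ecs-runner-cluster}"
  service="${2:-ecs-runner-ecs-service}"
  aws ecs wait services-stable --cluster "$cluster" --services "$service" || return 1
  aws ecs describe-services --cluster "$cluster" --services "$service" \
    --query 'services[0].runningCount' --output text
}
```

Calling `wait_for_runner` without arguments blocks until ECS reports the service as stable and then prints the number of running tasks, which should be between 1 and 2 with the autoscaling settings described above.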

Setting GitHub Actions

Before creating workflow files, save the secrets below to your Repository’s GitHub Secrets:

1. SLACK_TOKEN

2. SLACK_CHANNEL_ID

3. SLACK_BOT_NAME

4. AWS_ACCOUNT_ID

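5. GITHUB_TOKEN

Note that GitHub Actions provides GITHUB_TOKEN automatically for every workflow run, and secret names starting with GITHUB_ are reserved, so this one does not have to be created by hand.

Next, open the “.tfnotify” folder and fill in the fields below in the “github.yml” file: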

.......

owner: "{your org}"

name: "{your repo name}"

........

For GitHub Actions to run, you need workflow files. I have created workflow files for both CI and CD in the “.github” folder, where you can see all of the jobs in the CI-CD pipeline. Include these files as they are, together with the Terraform files used above, and push them to your repository.

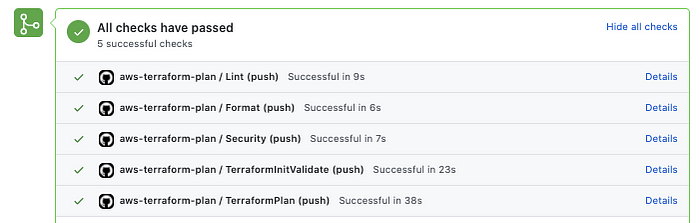

When you open a pull request against the main branch, CI runs.

After CI finishes, you can see the terraform plan output in a comment on the pull request.

If you merge the PR into the main branch, CD starts and terraform apply runs. After terraform apply finishes and services have been changed, deleted or added, a notification about it appears in the Slack channel you configured above.

YOUR PIPELINE IS READY. THAT IS IT!!!